Yesterday, the authorities banned another protest announced by the activist group Rouge One. This is the second time an announced protest has been banned due to “a fairly accurate prediction of violence occurring during the protest” by AuRii, the government’s new algorithm for predictive analytics. AuRii has been analysing tweets about the announced protest over the last 24 hours and its verdict seems clear: there is an 86% chance of violence during the protest. Activists are appalled by what they call a flagrant breach of their rights, and are calling for an investigation into how AuRii makes such predictions. In addition, evidence is emerging that bots and fake accounts are responsible for the violence-related tweets, pointing to organised sabotage of Rouge One’s activities. Despite public outcry, the authorities remain adamant that they cannot disclose the inner analytical workings of AuRii, as the court struggles to unpack the decision-making process in her wires.

If you haven’t heard of AuRii, don’t worry: this news is fictional. However, its premise is becoming increasingly likely as more artificial intelligence systems enter the sphere of public decision-making. In the study “Moralization in social networks and the emergence of violence during protests”, which used data from the 2015 Baltimore protests, researchers created an algorithm that can predict a link between tweets and street action hours before violence occurs. Another study, by the US Army Research Laboratory, concluded that the 2016 post-election protests could have been predicted by analysing millions of Twitter posts.

Such predictive analytics affect our rights to freedom of expression and assembly: they can be used by police to plan for disruptive events and divert them, but also to sabotage legitimate public activism and expression, or to silence dissent. Assemblies inherently cause disruption, but authorities have to accept them, as international standards require that protecting public order should not unnecessarily restrict peaceful assemblies.

How, then, could algorithmic systems be safely used in the public domain? To what extent can findings generated by algorithms be used as evidence to restrict freedoms and rights? We learn daily about existing or potential algorithmic systems being used in public decision-making, from facial recognition surveillance for the purpose of establishing “good credentials” to automated risk calculation models on welfare and other benefits that deter activists. Mozilla Foundation and Element AI state that a public right to an explanation already exists when an algorithmic system informs a decision that has a significant effect on a person’s rights, financial interests, personal health or well-being.

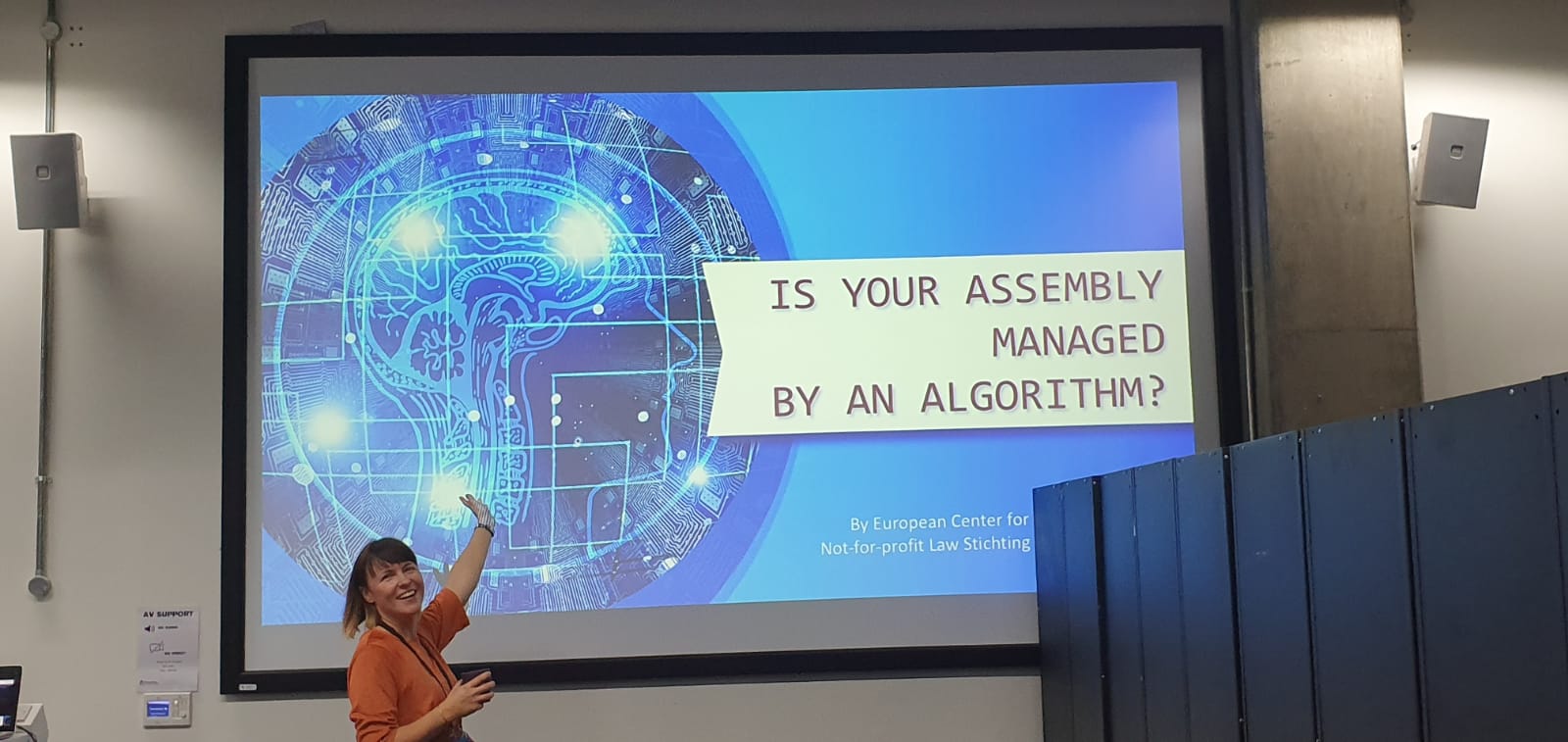

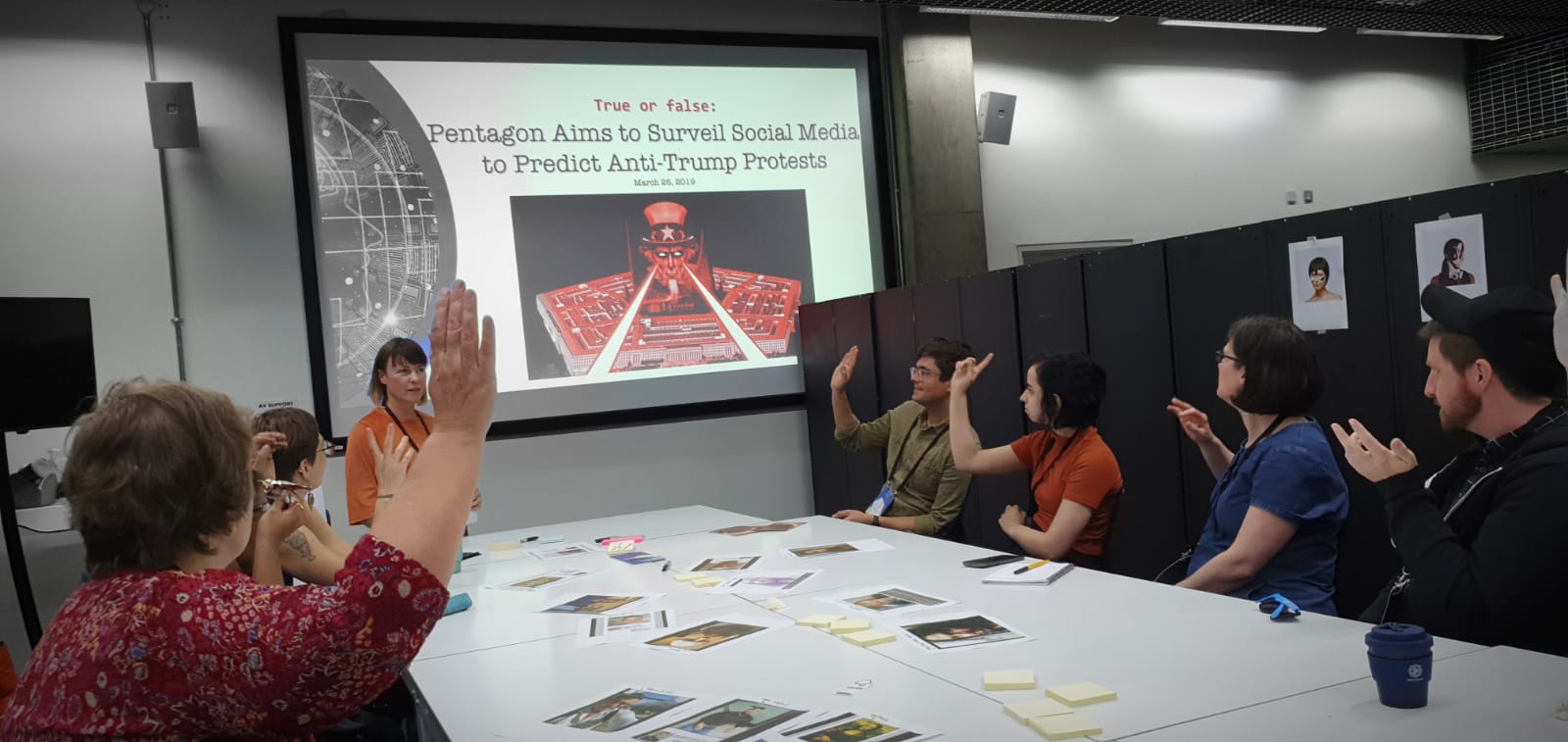

The truth is, no one knows exactly how these systems can impact our freedoms and rights. The Council of Europe holds that putting appropriate legislative, regulatory and supervisory frameworks in place is a responsibility of states, and recommends human rights impact assessments at every stage of the development, implementation and evaluation process. In addition, algorithmic systems designed by private companies for public use need to have transparency safeguards included in their terms of reference. For example, the authorities can require the source code to be made public. Researchers from the Alan Turing Institute in London and the University of Oxford call for scrutiny so that the public can see how an algorithm actually reached a decision when that decision affects people’s lives.

At the 2019 Mozilla Festival, ECNL hosted a discussion about the impact of algorithms on our freedom to assemble and protest with a group of lawyers, activists, technologists and academics. We agreed that bringing together the knowledge, experience, ideas and peer connections of such a diverse group was the winning ticket: we need each other to complement our thinking. We propose several parallel actions to ensure the protection and promotion of our rights:

- First, we need to gain insight into how algorithmic systems are designed and used, in order to understand their existing and potential impact on the rights to freedom of assembly, association, expression and participation, and to explore possibilities to use them for good. This can be done, for example, by creating a crowdsourced platform on “AI systems for civic freedoms” to channel information on the ways technology and algorithmic systems are being used by governments, companies or organisations. Civic activists, developers, academics and organisations could upload examples of cases where AI systems have been or could be used in ways that affect basic civic freedoms. These learnings can be mapped and further researched to inform policy, advocacy actions and the creation of practical guidelines on how to protect and promote these rights. Existing platforms, such as the OECD AI Observatory, could help facilitate such efforts.

- Second, we need to address a key challenge: how to practically translate human rights into the design of algorithmic systems, to achieve a human rights-centred design. According to the University of Birmingham research team, this means that algorithmic systems would be human rights compliant and reflect the core values that underpin the rule of law. They proposed translating human rights norms into software design processes and system requirements, admitting that some rights will be more readily translatable (such as the right to due process, the right to privacy and the right to non-discrimination), while others are likely to be difficult to “hard-wire”, such as the rights to freedom of expression, conscience and association. These difficulties are precisely why this challenge requires research and close collaboration between technical specialists and legal experts.

- Third, we need to strengthen legal safeguards through policy and advocacy for nuanced legal standards and guidance on tech and human rights in international treaties and regional frameworks. Efforts towards this are already under way at the United Nations and European levels. Both the Council of Europe and the European Union are determined to propose first-of-its-kind AI regulation within a year, which could set positive benchmarks for the rest of the world, as the rules would likely be based on Europe’s existing standards on human rights, democracy and the rule of law. Additionally, the EU aims to use access to its market as a lever to spread its approach to AI regulation across the globe. However, we need to make sure the bar is kept high and that all potential AI systems with an impact on human rights are included in such regulation. The experience from these processes can help inform other standard-setting processes, as well as upcoming human rights impact assessments of AI systems or national strategies and plans on AI. The report on Closing the Human Rights Gap in AI Governance offers a practical toolkit.

- Finally, there is an urgent need for consistent, inclusive, meaningful and transparent consultation with all stakeholders, specifically including broader civil society, human rights organisations and movements, academics, media and educational institutions. To be effective, such efforts should include investing time and resources in bringing these stakeholders into conversations about the development and implementation of national AI strategies and about the deployment, implementation and impact evaluation of AI systems, especially when used in public services. They should also include free educational programmes for the public on what AI is and how it can work for the benefit of societies. The public needs to understand, learn about and discuss at least the basic consequences of applying algorithmic systems that impact our lives.

In sum, we must develop broader knowledge-building networks and exchange learnings on practical implementation. Such actions also require going beyond traditional forms of cooperation: we must collaborate more outside our silos and across specialised fields. The fact that Mozilla Festival had faith that lawyers could host a tech-related session is an example of exactly that.

We thank Mozilla Festival for having faith that lawyers can host a tech-related session.

MozFest 2019 session hosts: Vanja Škorić, Program Director, ECNL | Katerina Hadži-Miceva Evans, Executive Director, ECNL

MozFest 2019 session discussants, in particular: Loraine Clark, University of Dundee | Juliana Novaes, researcher in law and technology | José María Serralde Ruiz, Ensamble Cine Mudo | CJ Bryan, Impact Focused Senior Product Engineer | Drew Wilson, Public Interest Computer Scientist | Extinction Rebellion activists

This blog is also featured on the Open Global Rights website.