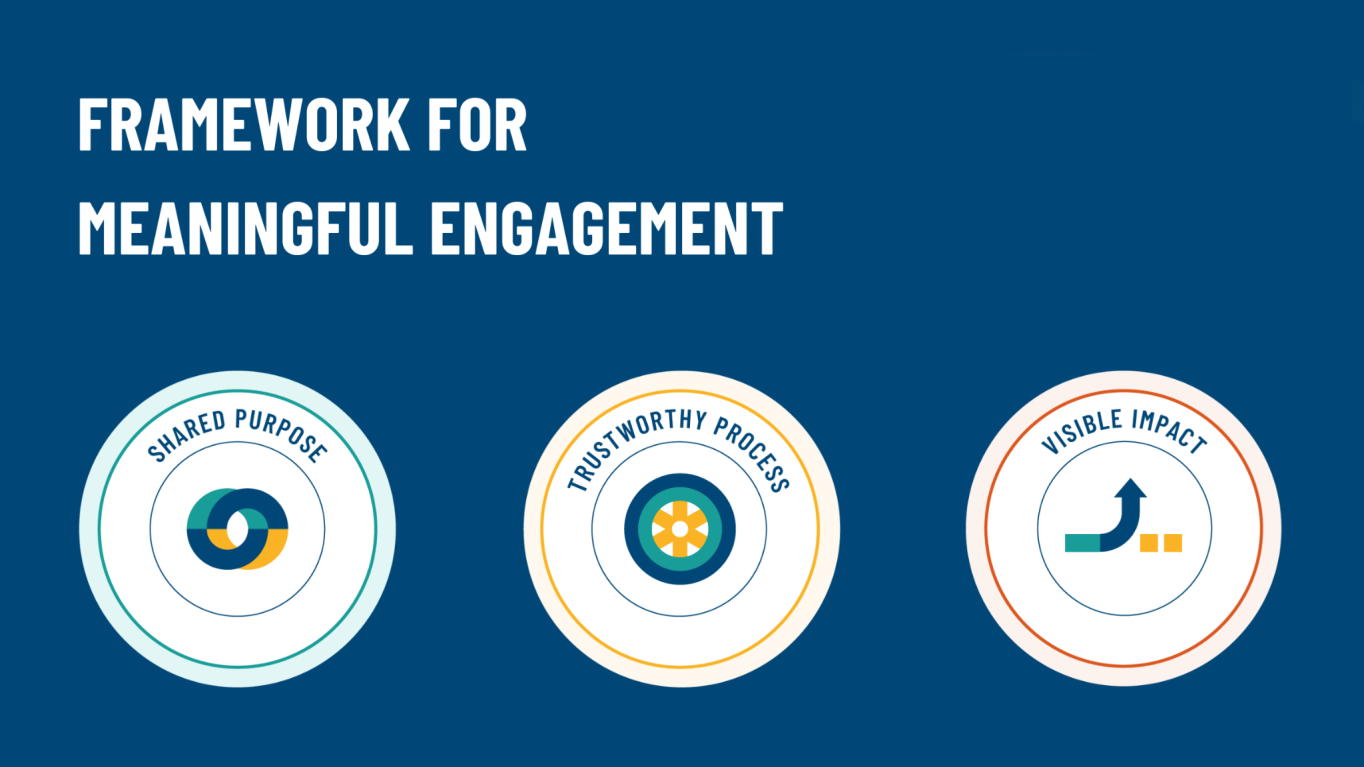

Earlier this year, ECNL, together with SocietyInside, developed a framework for meaningfully engaging external stakeholders, especially civil society and affected communities, in the development and use of AI systems and in assessing their impacts on human rights. Our framework for meaningful engagement (FME) is based on consultations with nearly 300 diverse experts and people with lived experience around the world. The framework is a valuable tool for digital platforms, not only for including external stakeholders as they build and deploy AI systems internally, but also for meeting their broader human rights due diligence and compliance obligations.

We are excited to announce that we are joining forces with Discord to test the FME, with the aim of strengthening and formalising Discord’s stakeholder engagement processes.

The goal of our collaboration is to test the relevance and usefulness of the FME by applying it to Discord’s real-world use of AI. The pilot will focus on the needs of Discord’s Safety ML team as they build models and integrate large language models (LLMs) into child safety content flagging, user education, and moderation.

While Discord will lead the pilot, the project is a co-creation, and we are committed to working collaboratively and openly throughout the process. We are especially excited to engage a diverse set of stakeholders globally, from experts in digital rights and AI to marginalised groups and people with lived experience.

Our ultimate goal is to ensure that LLMs are used for content governance in a way that protects and promotes civic space and human rights.

Later this year, we look forward to revising and improving the FME based on practical learnings and findings from the pilot. We hope these will help other AI companies carry out meaningful stakeholder engagement and take a rights-based approach to algorithmic content governance.

Please reach out to [email protected] if you’d like to get involved in the pilot, and stay tuned for future announcements and updates!