Technology and Artificial Intelligence

Emerging technologies have transformed the way we exercise our rights and freedoms, creating both new opportunities and new challenges. Yet while civil society both depends on and is subject to these technologies, it is largely excluded from their development and use. As a result, we have yet to explore the full potential of emerging technologies to enable civil society and strengthen civic freedoms while preventing harm.

Newly evolving technologies, including algorithmic systems, are too often inadequate at best and harmful at worst. AI systems can affect civic freedoms by being used to target, surveil, harass, smear, censor, and criminalise civic space actors. This prevents people from exercising their rights and civil society from fully carrying out its activities, especially when these systems are used for authoritarian or illiberal purposes. Importantly, AI systems can exacerbate existing power imbalances, particularly as civil society already operates in an environment of rising global inequality and concentration of power driven by capitalism, systemic racism, sexism, and other forms of discrimination.

At ECNL, we are alarmed that emerging technologies are often developed and deployed without adequate legal safeguards and without the meaningful participation of affected communities. This significantly undermines democracy, the rule of law, and human rights. Some national, regional and global initiatives are now exploring how to pre-empt the negative impacts of emerging technologies on democracy and civic freedoms and are attempting to create rights-based frameworks for transparency, accountability and civic engagement. However, even as policy and legislative efforts to rein in technology slowly emerge, they can also undermine existing rights, including those of marginalised groups. This is especially dangerous when AI governance is instrumentalised to expand government or corporate power. A strong civic voice is therefore needed to secure regulatory and policy safeguards that truly protect people’s human rights.

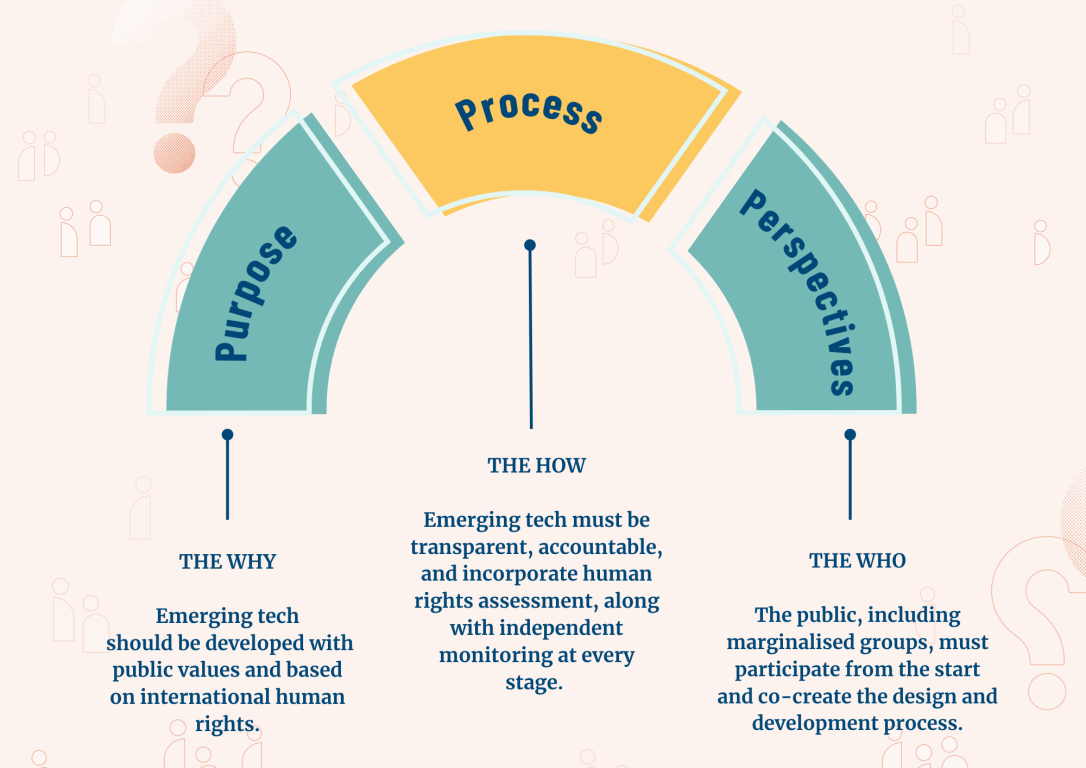

The strategy of ECNL’s digital rights team is guided by three Ps: Purpose, Process and Perspectives, which we believe should underpin all technology development, use and regulation.

1. Protect and promote democratic principles and human rights standards in/through policymaking

Democratic principles and human rights standards must be preserved and promoted in a digital society. To achieve this goal, we work closely with our global network of partners to ensure that digital regulation affecting online civic space fully complies with international human rights law and protects civic freedoms. Given that many of the core frameworks governing emerging technologies will have been enacted by the end of 2024 (e.g., the EU Digital Services Act (DSA) and AI Act, the Council of Europe Convention on AI, and the UN Global Digital Compact), our focus is on implementing and enforcing these key legal instruments.

- We shape AI regulation and standard-setting processes at the national, regional, and international levels (European Union, Council of Europe, United Nations, the Organisation for Economic Co-operation and Development (OECD) AI Policy Observatory and its working groups). We aim to ensure strong procedural safeguards for emerging technologies, such as AI systems, through research and advocacy on issues like mandatory human rights due diligence and ex ante human rights impact assessments. We also push back against blanket exemptions for national security and counter-terrorism and work to ensure that any restrictions on civic freedoms respect the principles of legitimate aim, legality, necessity, and proportionality.

- We contribute to developing guiding principles, tools, and standards within multi-stakeholder initiatives such as the Global Internet Forum to Counter Terrorism (GIFCT), the Global Network Initiative (GNI) and the Steering Committee of the Open Government Partnership (OGP), as well as within standardisation bodies and national regulatory processes.

- We develop best practices for implementing and enforcing regulation of emerging technologies (e.g., EU DSA, EU AI Act).

2. Strengthen civic engagement and multi-stakeholder AI/tech governance

Meaningful participation is essential to developing inclusive and rights-based policies and regulations. That is why we encourage and support civil society broadly, and marginalised groups in particular, to engage more effectively and knowledgeably in discussions about the design, development, and deployment of emerging technologies for beneficial outcomes.

To achieve this goal, we develop and pilot mechanisms that support the engagement of civil society and other relevant stakeholders in the design, development and use of emerging technologies. We empower civil society to participate in tech policy and standard setting through knowledge and coalition building. This includes both digital rights organisations and organisations that do not typically focus on digital matters, both European-based and international, with a particular focus on Global Majority-based CSOs and marginalised groups.

- We establish best practices and guiding principles for meaningful engagement of civil society in AI governance and development.

- We collaborate with AI and emerging technology developers and deployers to strengthen the inclusion of civil society, especially of marginalised groups.

- We build alliances with other sectors to advance responsible AI (e.g., investors, journalists, trade unions, and consumer groups).

3. Expose the impacts of emerging technologies on civic space and human rights and develop harm reduction and accountability mechanisms

Conducting evidence-based research on ways to enhance civil society’s capacity to participate meaningfully in AI governance and development is key for ECNL. In addition, we support the creation and use of new tools that expand civic space (e.g., digital fundraising tools and e-participation).

- We conduct research at the intersection of human rights, civic space, and emerging technology, with a focus on digital surveillance and platforms.

- We research the human rights impacts of emerging technologies used in counter-terrorism measures.

- We develop procedural standards for AI policymaking and development (e.g., human rights impact assessments for AI and meaningful stakeholder engagement).

Procedural Safeguards

Human rights due diligence (HRDD), including human rights impact assessments (HRIAs) for AI.

HRIAs have the potential to prevent and mitigate adverse impacts of AI systems on human rights and to hold those who develop and deploy AI systems accountable. We lead advocacy to mandate ex ante HRIAs and conduct research to develop best practices for carrying out such assessments.

Meaningful stakeholder engagement.

Emerging technologies need to be developed and regulated with the meaningful participation of external stakeholders from diverse groups. Regulatory efforts for AI increasingly include provisions on stakeholder engagement, yet we also face the reality of ‘participation washing’. Against this backdrop, we work to develop and implement practical processes that articulate best practices for engagement and centre the marginalised groups who are most at risk of harm from AI systems.

Transparency and remedy.

Platforms’ decisions are often inconsistent, opaque, discriminatory, and/or made in error. We call for transparent and inclusive internal processes based on due process, non-discrimination, and stakeholder engagement.

Rights-based exemptions for national security/counter-terrorism.

Many governments propose to exempt all uses of AI for national security purposes from basic requirements and fundamental rights guarantees. We work to ensure that any development, deployment, or repurposing of technology and/or databases is carried out in a rights-based way.

AI governance frameworks.

We advocate for AI governance that is inclusive and accountable, with robust oversight and monitoring and with clear roles and responsibilities for those who develop or deploy AI.

Digital Surveillance and Platforms

Biometric surveillance.

Biometric surveillance, especially when deployed in public spaces, affects everyone, as it collects and processes our most personal and permanent data: our biometrics. Activists and civic space actors, especially protesters in public spaces, are disproportionately affected because they are often targeted for monitoring, surveillance and sometimes criminalisation by governments. We work in coalition with partners globally to ban biometric surveillance in public spaces, especially facial and emotion recognition.

Personal data collection, processing and sharing.

The collection, processing and sharing of personal data can enable and expand profiling and surveillance. Data gathered into databases may also be used for purposes other than those for which it was collected, without people’s awareness or consent. We work to ensure strong oversight of actors who collect, process and share personal data, and to hold them accountable for the resulting harms.

Predictive systems for law enforcement and counter-terrorism/national security agencies.

Government policies and practices that rely on predictive analytics can disadvantage certain groups, such as human rights activists, dissidents, or BIPOC (Black, Indigenous and other People of Colour) communities, by flagging them as “threats”. This can prevent them from exercising their basic rights and accessing services such as banking, travel, and housing. When these tools are used in migration, criminal justice or policing, the risks are even more severe. We work to ensure that developers and deployers of predictive analytics conduct ex ante human rights impact assessments, and we call for bans on their use in certain high-risk areas, such as criminal justice.

Automated content governance (content moderation & recommender systems).

Over-broad efforts to remove content can inadvertently result in the suppression of legitimate content, thereby limiting freedom of expression, civic engagement, and activism, often disproportionately suppressing the speech of groups that are already marginalised. We work to ensure that platforms take a process-driven and human rights-based approach to content governance.

Emerging topics (e.g., generative AI, extended reality (AR/VR), decentralised platforms).

While these technologies are still at an early stage of development and adoption, there is a race to finance, build, and use them with little or no understanding of their implications for human rights and civic space. We seek to assess their human rights impacts so that developers and deployers can take adequate harm reduction measures, policymakers can regulate them appropriately, and these technologies are developed and used in ways that promote civic freedoms.

Government requests for information and content takedown.

Governments’ access to confidential communications and other private information stored by online and telecommunications services poses a serious threat to the right to privacy. We work to ensure digital platforms have internal teams to critically assess and potentially push back against overbroad and unjustified government requests.