The use of artificial intelligence (AI) is accelerating. So is the need to ensure that AI systems are not only effective, but also fair, non-discriminatory, transparent, rights-based, accountable, and sustainable – in short, responsible. An important step for preventing and mitigating harm from AI systems is to identify and assess their impacts on human rights, so that adequate measures can be taken to address negative impacts. Human rights impact assessments need to include diverse voices, disciplines and lived experiences from a variety of external stakeholders. A key question inevitably arises: how can AI developers and deployers meaningfully engage stakeholders, so that their crucial input informs and shapes the AI impact assessment?

In practice, this breaks down into three questions:

- What makes engagement ‘meaningful’?

- What does a trustworthy engagement process look like?

- How can meaningful engagement be distinguished from meaningless engagement?

Both convenors (such as public institutions and private sector companies) and potential participants in such engagement processes want the time and energy they invest in engagement to lead to concrete results, and they increasingly expect clear answers to the above questions.

This Framework attempts to provide some answers. ECNL and SocietyInside, in collaboration with numerous contributors from all sectors, have been developing a Framework for meaningful engagement of civil society, affected communities and other external stakeholders in the context of human rights impact assessments of AI systems. Against the backdrop of ongoing efforts to regulate AI and the growing inclusion of provisions on stakeholder engagement – while facing the reality of ‘participation washing’ – we believe there is a real opportunity to strengthen stakeholders’ voices. Collectively mapping out a practical process that enables genuine collaborative problem-solving can result in beneficial, human-centered and sustainable AI development.

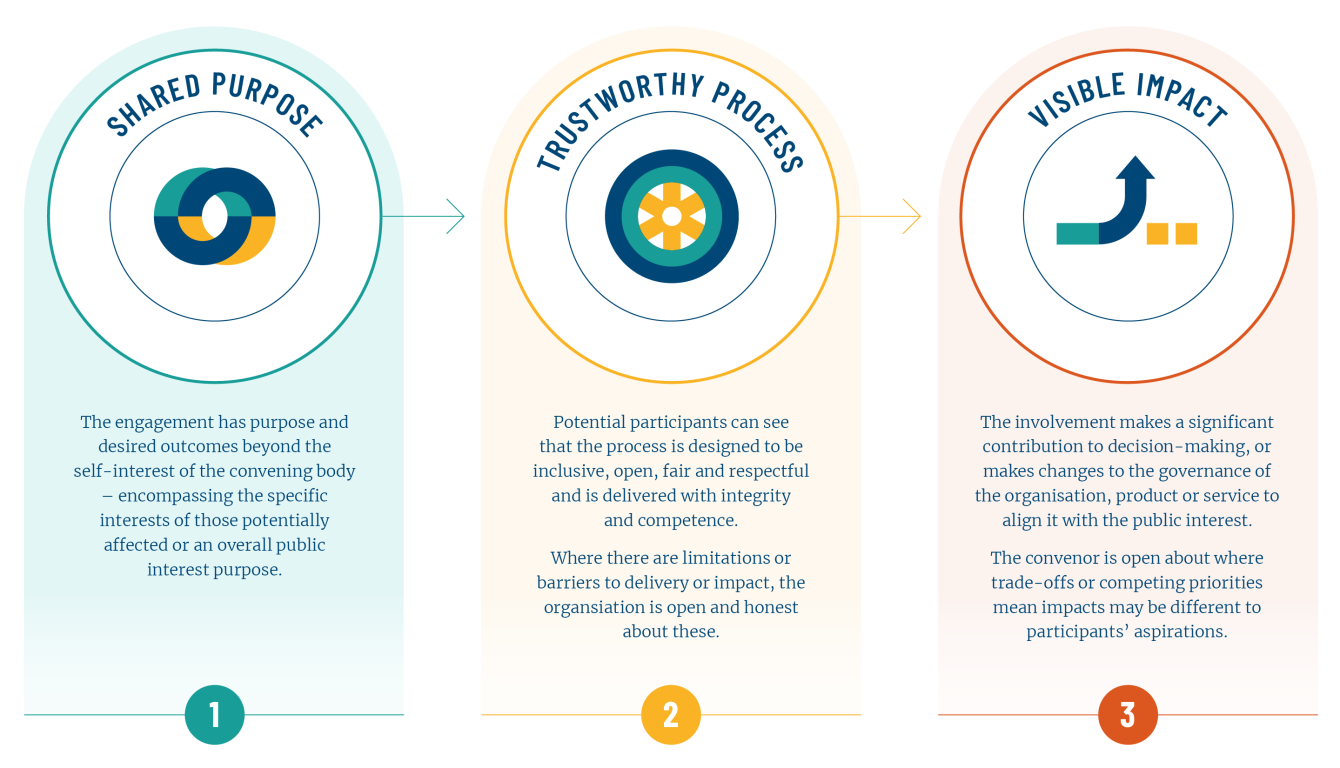

The Framework articulates minimum conditions for engagement and uses these as a baseline for involving the public and civil society in the work of companies, public entities and multilateral bodies during AI design and development. These conditions build on the UN Guiding Principles on Business and Human Rights (UNGPs), with the goal of embedding respect for people’s rights and dignity. The Framework seeks to improve both the outreach and the quality of stakeholder engagement, with the necessary emphasis on groups who are most at risk of harm from AI systems. It includes empowering and constructive tools that support co-creation and positive collaboration, delivering three key elements of meaningful engagement: Shared Purpose, Trustworthy Process and Visible Impact.

We have begun piloting the Framework to test its practical implementation and usefulness, working with the City of Amsterdam, as a public body developing AI for its citizens, and with an AI-driven social media platform. The Framework has already been used to help prepare a participation plan for a specific AI development within the City of Amsterdam, building on Framework tools such as establishing a shared purpose, mapping stakeholders, prioritising and choosing engagement methods, and estimating the costs of engagement.

In addition, we will continue consulting civil society and other stakeholders on the content and future iterations of the Framework, a living document that evolves in parallel with practical needs. If you are interested in providing additional input, ideas, or suggestions for a pilot implementation, please reach out to us at [email protected], or on Twitter, LinkedIn or Mastodon.